Log in to the Artifactory server.

Click on Tools --> User Management --> Users.

Click on New user.

Fill in the user details and give the user admin privileges.

Make sure you follow the password policy as mentioned. Click on Save.

That's it. That is how you create an admin user in Artifactory.
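The same result can also be scripted instead of clicked through. Below is a minimal sketch, assuming your Artifactory edition and version expose the security REST endpoint `PUT /api/security/users/{userName}` and that you already have an existing admin account to authenticate with. The host name, user names, and passwords are hypothetical placeholders. The function only builds the request, so nothing is sent until you call `urlopen` yourself.

```python
import base64
import json
import urllib.request


def build_create_admin_request(base_url, new_user, email, password,
                               admin_user, admin_password):
    """Build (but do not send) a PUT request that creates an admin user.

    Assumes the Artifactory security REST API is available at
    <base_url>/api/security/users/<new_user>.
    """
    # "admin": True asks Artifactory to grant admin privileges to the user.
    payload = {"email": email, "password": password, "admin": True}

    # HTTP Basic authentication with an existing admin account.
    credentials = f"{admin_user}:{admin_password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")

    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/security/users/{new_user}",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    req = build_create_admin_request(
        "https://artifactory.example.com/artifactory",
        "new-admin", "new-admin@example.com", "Str0ng!Passw0rd",
        "admin", "existing-admin-password",
    )
    print(req.get_method(), req.full_url)
    # To actually create the user, send it: urllib.request.urlopen(req)
```

The password you pass still has to satisfy the server's password policy, just as in the UI.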


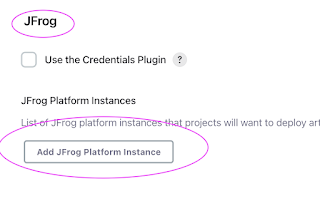

How to Integrate Artifactory with Jenkins?

You can install the Artifactory plug-in to integrate Artifactory with Jenkins. Let us see how to integrate Jenkins with Artifactory so that you can upload any binary file, such as a WAR, EAR, JAR, EXE, or DLL, from Jenkins.
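To make the upload step more concrete, here is a rough sketch of what deploying a binary to Artifactory looks like at the HTTP level: a `PUT` of the file bytes to `<base URL>/<repository key>/<target path>`. This is not the Jenkins plug-in's own code, only an illustration. The repository key `libs-release-local`, the host name, and the access token are hypothetical, and bearer-token authentication is assumed.

```python
import hashlib
import urllib.request


def build_upload_request(base_url, repo_key, target_path, content, access_token):
    """Build (but do not send) a PUT request that deploys a binary file."""
    # Sending the SHA-1 lets the server verify the uploaded bytes.
    sha1 = hashlib.sha1(content).hexdigest()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{repo_key}/{target_path.lstrip('/')}",
        data=content,
        method="PUT",
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed auth scheme
            "X-Checksum-Sha1": sha1,
        },
    )


if __name__ == "__main__":
    # Hypothetical values; in a real build, read the WAR/JAR from disk.
    req = build_upload_request(
        "https://artifactory.example.com/artifactory",
        "libs-release-local",
        "com/example/app/1.0/app-1.0.war",
        b"example bytes standing in for a WAR file",
        "my-access-token",
    )
    print(req.get_method(), req.full_url)
    # To perform the upload: urllib.request.urlopen(req)
```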

How to set up Artifactory using Docker Compose?

Building pipelines in Azure DevOps is really easy. You can migrate your web applications from anywhere into Azure Cloud by using Azure Pipelines.

Pre-requisites:

Create an Azure Build YAML pipeline in Azure DevOps

Log in to your Azure DevOps organization and go to your project dashboard.

Click on Pipelines --> New pipeline

You can use a personal access token (PAT) as an alternate password to authenticate into Azure DevOps.

A personal access token (PAT) contains your security credentials for Azure DevOps. A PAT identifies you, your accessible organizations, and your scopes of access. As such, PATs are as critical as passwords, so you should treat them the same way.
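As an illustration of "alternate password": when calling Azure DevOps over HTTP, a PAT is typically sent through HTTP Basic authentication with an empty user name and the token in the password position. The sketch below only builds that header. The function name `pat_auth_header` and the environment variable `AZDO_PAT` are hypothetical names chosen for this example.

```python
import base64
import os


def pat_auth_header(pat: str) -> dict:
    """Return an HTTP Basic Authorization header for an Azure DevOps PAT.

    The user name is left empty, so the encoded value is ":<PAT>".
    """
    token = base64.b64encode(f":{pat}".encode("ascii")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # Read the PAT from the environment instead of hard-coding it,
    # since it should be protected like a password.
    pat = os.environ.get("AZDO_PAT", "")
    header = pat_auth_header(pat)
    # Print only the scheme so the encoded token never lands in logs.
    print(header["Authorization"].split(" ")[0])
```

Base64 is an encoding, not encryption, so the header should only ever travel over HTTPS.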

Create Personal Access Token

2. Click on User settings, then select Personal access tokens.

3. Click on New Token.

4. For the scope, select Agent Pools (read, manage) and make sure all the other boxes are cleared.

5. Now copy the token and store it in a secure place where you can refer to it. We will use it when configuring the build agent in Azure DevOps.

Let us learn how to create and configure a Self-Hosted Agent in Azure DevOps (ADO).

What is an Agent?

An agent is computing infrastructure with installed agent software that runs one job at a time.

To build your code or deploy your software using Azure Pipelines, you need at least one agent. As you add more code and people, you'll eventually need more.

When your pipeline runs, the system begins one or more jobs.

In Azure Pipelines, there are two types of build agents:
