Amazon ECR uses Amazon S3 for storage to make your container images highly available and accessible, allowing you to reliably deploy new containers for your applications. Amazon ECR transfers your container images over HTTPS and automatically encrypts your images at rest. Amazon ECR is integrated with Amazon Elastic Container Service (ECS), simplifying your development to production workflow.

Prerequisites:

- An EC2 instance up and running with Docker installed

- Port 8081 open in the instance's security group (the sample app is served on this port)
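If you prefer the AWS CLI to the console for opening the port, here is a minimal sketch. It assumes a CLI already configured with credentials allowed to modify security groups. `open_port_8081` is a hypothetical helper name, and the security group ID you pass is a placeholder for your instance's group. The default CIDR `0.0.0.0/0` opens the port to the whole internet, so pass a narrower range, such as your own IP with `/32`, where you can.

```bash
# Hypothetical helper: allow inbound TCP 8081 on a security group.
# $1 = security group ID (placeholder), $2 = CIDR (defaults to 0.0.0.0/0).
open_port_8081() {
  if [ -z "${1:-}" ]; then
    echo "usage: open_port_8081 SECURITY_GROUP_ID [CIDR]" >&2
    return 1
  fi
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port 8081 \
    --cidr "${2:-0.0.0.0/0}"
}
```

For example: `open_port_8081 sg-0123456789abcdef0 203.0.113.10/32` (both values are placeholders).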

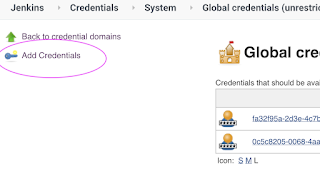

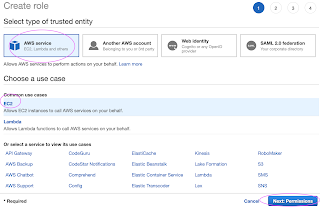
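Pushing an image later in this walkthrough requires an ECR repository (the `your-ecr-repo-name` placeholder) to exist in your account. The sketch below creates one from the CLI instead of the console. It assumes configured credentials and the `us-east-2` region used in the later commands, and `create_ecr_repo` is a hypothetical helper. ECR repository names must be lowercase, so the helper rejects names containing uppercase letters before calling AWS.

```bash
# Hypothetical helper: create an ECR repository.
# $1 = repository name (lowercase), $2 = region (defaults to us-east-2).
create_ecr_repo() {
  case "${1:-}" in
    "" | *[[:upper:]]*)
      echo "usage: create_ecr_repo REPO_NAME [REGION] (name must be lowercase)" >&2
      return 1
      ;;
  esac
  aws ecr create-repository \
    --repository-name "$1" \
    --region "${2:-us-east-2}"
}
```

For example: `create_ecr_repo your-ecr-repo-name us-east-2`.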

Step 2 - Create an IAM role

You need to create an IAM role with

Now search for

Now give the role a name and create it.

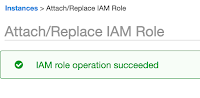
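The same role can also be created from the CLI. The permissions in this sketch are an assumption. It attaches the AWS managed policy `AmazonEC2ContainerRegistryPowerUser`, which allows pushing and pulling images, so substitute another policy if your setup needs one. It also creates an instance profile with the role's name. The console does this automatically for EC2 roles, but the CLI does not. `ec2_trust_policy` and `create_ecr_role` are hypothetical helper names.

```bash
# Print a trust policy that lets EC2 instances assume the role.
ec2_trust_policy() {
  printf '%s\n' '{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Principal":{"Service":"ec2.amazonaws.com"},"Action":"sts:AssumeRole"}]}'
}

# Hypothetical helper: create the role, attach an ECR managed policy
# (an assumption; swap in the policy you need), then create an instance
# profile with the same name and add the role to it.
create_ecr_role() {
  if [ -z "${1:-}" ]; then
    echo "usage: create_ecr_role ROLE_NAME" >&2
    return 1
  fi
  aws iam create-role --role-name "$1" \
    --assume-role-policy-document "$(ec2_trust_policy)" &&
    aws iam attach-role-policy --role-name "$1" \
      --policy-arn arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPowerUser &&
    aws iam create-instance-profile --instance-profile-name "$1" &&
    aws iam add-role-to-instance-profile \
      --instance-profile-name "$1" --role-name "$1"
}
```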

Step 3 - Assign the role to the EC2 instance

Go to the AWS console, click EC2, select your EC2 instance, and choose Instance Settings.

Click Attach/Replace IAM Role, choose the role you created from the dropdown, and click Apply.
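The console steps above correspond to a single CLI call. Run this sketch from a machine with credentials that can manage EC2, which does not have to be the instance itself. `attach_instance_profile` is a hypothetical helper, and the instance ID is a placeholder. If the instance already has a profile attached, `associate-iam-instance-profile` fails. In that case, replace the existing association with `aws ec2 replace-iam-instance-profile-association` instead.

```bash
# Hypothetical helper: attach an instance profile to an EC2 instance.
# $1 = instance ID (placeholder), $2 = instance profile name.
attach_instance_profile() {
  if [ "$#" -lt 2 ]; then
    echo "usage: attach_instance_profile INSTANCE_ID PROFILE_NAME" >&2
    return 1
  fi
  aws ec2 associate-iam-instance-profile \
    --instance-id "$1" \
    --iam-instance-profile Name="$2"
}
```

For example: `attach_instance_profile i-0123456789abcdef0 my-ecr-role`.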

Now log in to the EC2 instance where you installed Docker. To connect to Amazon ECR from the instance, you need the AWS CLI, which you can install with:

sudo apt install awscli -y

Once the AWS CLI is installed, verify the installation:

aws --version
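Logging in to ECR from the CLI typically uses `aws ecr get-login-password`, which exists in AWS CLI v2 and in v1 from 1.17.10 onward. The version apt installs depends on your distribution release, and older 1.x builds lack this command. A small check on the `aws --version` output can catch the problem before you try to log in. `cli_supports_get_login_password` is a hypothetical helper, and it assumes the usual `aws-cli/X.Y.Z ...` output format.

```bash
# Succeed if the given `aws --version` output reports AWS CLI v2, or v1 at
# 1.17.10 or later (releases that include `aws ecr get-login-password`).
cli_supports_get_login_password() {
  local ver major rest minor patch n
  ver=${1:-}
  ver=${ver#aws-cli/}   # drop the "aws-cli/" prefix
  ver=${ver%% *}        # keep the first word, e.g. "1.18.69"
  major=${ver%%.*}
  rest=${ver#*.}
  minor=${rest%%.*}
  patch=${rest#*.}
  # Reject anything that is not a plain numeric X.Y.Z version.
  for n in "$major" "$minor" "$patch"; do
    case "$n" in
      "" | *[!0-9]*) return 1 ;;
    esac
  done
  [ "$major" -ge 2 ] && return 0
  [ "$major" -eq 1 ] || return 1
  [ "$minor" -gt 17 ] || { [ "$minor" -eq 17 ] && [ "$patch" -ge 10 ]; }
}
```

For example: `cli_supports_get_login_password "$(aws --version 2>&1)" && echo ok`. The `2>&1` is there because some older 1.x releases print the version to stderr. Once the role is attached, `aws sts get-caller-identity` should report an `assumed-role` ARN containing your role name. That confirms the CLI is picking up the instance's credentials.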

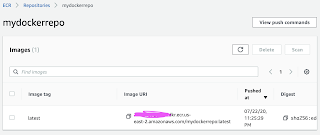

Here, your_acct_id is your AWS account ID, which you can find in Amazon ECR as shown in the picture above.
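Docker is commonly logged in to ECR by piping `aws ecr get-login-password` into `docker login`. The sketch below wraps that pipeline. It assumes the instance role from Step 3 supplies the credentials and that you are using the `us-east-2` region from the later commands. `ecr_registry` and `ecr_login` are hypothetical helper names.

```bash
# Print the ECR registry host for an account ID and region.
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Hypothetical helper: log Docker in to ECR with a short-lived token.
# $1 = account ID, $2 = region (defaults to us-east-2).
ecr_login() {
  if [ -z "${1:-}" ]; then
    echo "usage: ecr_login ACCOUNT_ID [REGION]" >&2
    return 1
  fi
  local region
  region=${2:-us-east-2}
  aws ecr get-login-password --region "$region" |
    docker login --username AWS --password-stdin "$(ecr_registry "$1" "$region")"
}
```

For example: `ecr_login your_acct_id us-east-2`. Passing the token on stdin keeps it out of the shell history and the process list.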

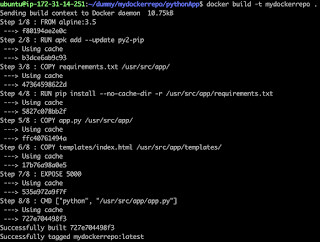

You should see the message "Login Succeeded". Now let's build a Docker image. I have already created a public repo in Bitbucket, so run the command below to clone it:

git clone https://bitbucket.org/ananthkannan/mydockerrepo && cd mydockerrepo/pythonApp

docker build . -t mypythonapp

This builds a Docker image named mypythonapp, tagged latest by default.

Now tag the Docker image you built:

docker tag mypythonapp:latest your_acct_id.dkr.ecr.us-east-2.amazonaws.com/your-ecr-repo-name:latest

You can view the image you built with the docker images command. Then push it to your ECR repository:

docker push your_acct_id.dkr.ecr.us-east-2.amazonaws.com/your-ecr-repo-name:latest
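The tag and push steps use the same `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>` reference, so you can combine them. This sketch uses hypothetical helpers `ecr_image_ref` and `tag_and_push` and assumes Docker is already logged in to the registry.

```bash
# Print the full ECR image reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>
ecr_image_ref() {
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$1" "$2" "$3" "${4:-latest}"
}

# Hypothetical helper: tag a local image with its ECR reference, then push it.
tag_and_push() {
  if [ "$#" -lt 4 ]; then
    echo "usage: tag_and_push LOCAL_IMAGE ACCOUNT_ID REGION REPO [TAG]" >&2
    return 1
  fi
  local ref
  ref=$(ecr_image_ref "$2" "$3" "$4" "${5:-latest}")
  docker tag "$1" "$ref" && docker push "$ref"
}
```

For example: `tag_and_push mypythonapp:latest your_acct_id us-east-2 your-ecr-repo-name`.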

Now you should be able to log in to the AWS console, open your repository in Amazon ECR, and see the uploaded image.
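To check the pushed image without the console, you can use `aws ecr describe-images` to list a repository's images. This sketch assumes the `us-east-2` region. `list_ecr_images` is a hypothetical helper, and the `--query` expression is just one choice of columns.

```bash
# Hypothetical helper: show the first tag and push time of each image in a repository.
# $1 = repository name, $2 = region (defaults to us-east-2).
list_ecr_images() {
  if [ -z "${1:-}" ]; then
    echo "usage: list_ecr_images REPO_NAME [REGION]" >&2
    return 1
  fi
  aws ecr describe-images \
    --repository-name "$1" \
    --region "${2:-us-east-2}" \
    --query 'imageDetails[].[imageTags[0], imagePushedAt]' \
    --output table
}
```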

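Finally, run a container from the ECR image, mapping port 8081 on the instance to port 5000 inside the container:

sudo docker run -p 8081:5000 --rm --name myfirstApp1 your_acct_id.dkr.ecr.us-east-2.amazonaws.com/your-ecr-repo-name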
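Without `-d`, `docker run` stays in the foreground, so you can check the app from a second terminal. This sketch polls a URL with curl. It assumes curl is installed and that the app answers `GET /` with a success status. `wait_for_url` is a hypothetical helper.

```bash
# Hypothetical helper: poll a URL until it answers with a success status.
# $1 = URL, $2 = attempts (default 10), $3 = seconds between attempts (default 2).
wait_for_url() {
  local url attempts delay i
  url=$1
  attempts=${2:-10}
  delay=${3:-2}
  i=1
  while :; do
    # -f treats HTTP errors (4xx/5xx) as failures; -sS hides progress but keeps errors.
    if curl -fsS -o /dev/null "$url"; then
      echo "up: $url"
      return 0
    fi
    [ "$i" -ge "$attempts" ] && break
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no response from $url after $attempts attempt(s)" >&2
  return 1
}
```

On the instance, run `wait_for_url http://localhost:8081/`. From your own machine, use the instance's public IP on port 8081, which is reachable only if the security group allows it.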